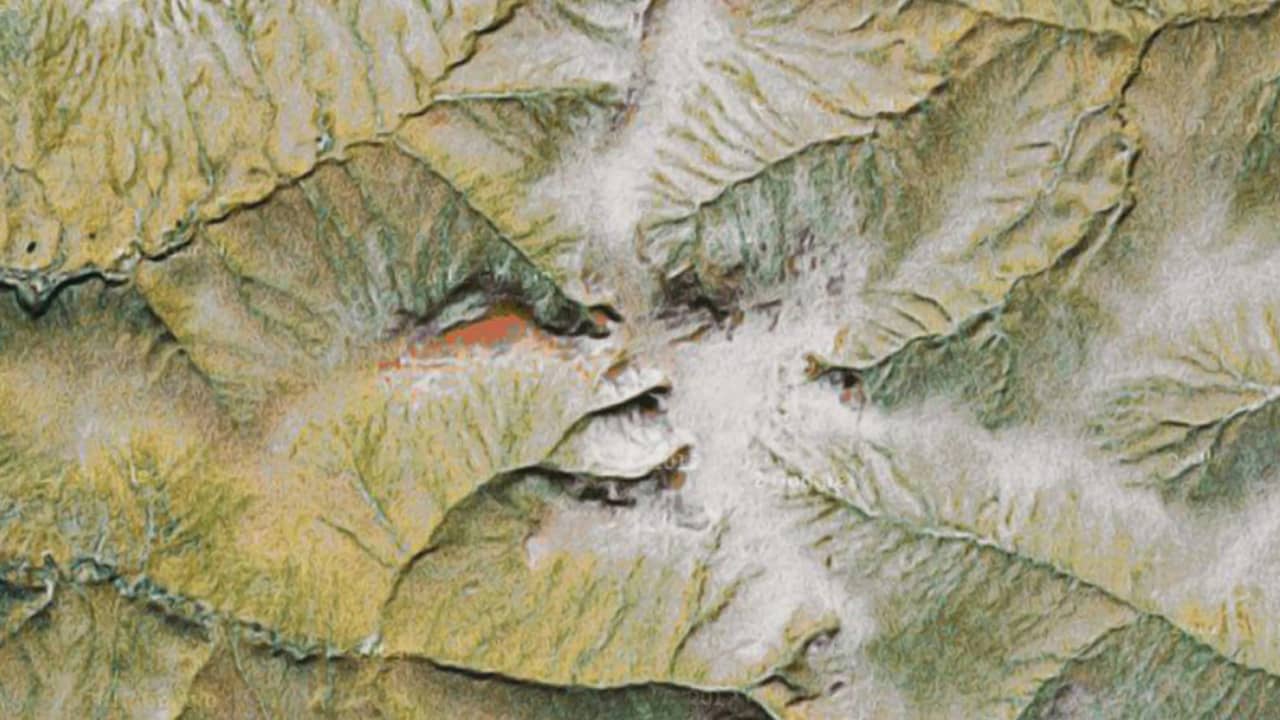

An algorithmic robot hovers over the world to spot portraits hidden in the topography of planet Earth. The custom application works autonomously, processing vast numbers of satellite images from Google Maps with a face detection algorithm. This endless cycle produces interesting results for reflection on the natural world.

Objective investigations and subjective imagination collide into one inseparable process in the human desire to detect patterns. The tendency to detect meaning in vague visual stimuli is a psychological phenomenon called pareidolia.

»Google Faces« explores how the cognitive experience of pareidolia can be generated by a machine. An algorithm developed to simulate this occurrence drives a face tracker that continuously searches for figure-like shapes while hovering above the landscapes of the earth. The primary inspiration for the project was the “Face on Mars” image taken by the Viking 1 spacecraft on July 25th, 1976.

One of the key aspects of »Google Faces« is the autonomy of the face-searching agent and the impressive amount of data it can investigate. The agent flips through satellite images provided by Google Maps and feeds landscape samples to the face detection algorithm. A corresponding calculation moves sequentially along the latitude and longitude of the earth, and once the face tracker has circumnavigated the globe, it switches to the next zoom level and starts all over again. Because the step size decreases with each iteration, the number of images scanned and the travel time of the device increase exponentially.
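The traversal described above can be sketched roughly as follows. This is only an illustration, not the project's actual code: the function name `scan_positions`, the starting zoom level, the 90° starting step, the halving of the step at each zoom level, and the ±85° latitude limit are all assumptions made for the example. Fetching the satellite image and running the face detector at each position are left out.

```python
from collections import Counter
from typing import Iterator, Tuple


def scan_positions(
    start_zoom: int = 2,       # assumed starting zoom level
    num_zooms: int = 3,        # how many full passes around the globe to make
    start_step: float = 90.0,  # assumed step size in degrees for the first pass
    max_lat: float = 85.0,     # assumed latitude limit of the map imagery
) -> Iterator[Tuple[int, float, float]]:
    """Yield (zoom, latitude, longitude) for every sample position.

    Each pass walks the globe row by row; after a full pass the zoom
    level goes up by one and the step size is halved (an assumption).
    """
    step = start_step
    for zoom in range(start_zoom, start_zoom + num_zooms):
        rows = int(2 * max_lat // step) + 1  # latitude samples in this pass
        cols = int(360 // step)              # longitude samples in this pass
        for r in range(rows):
            lat = -max_lat + r * step
            for c in range(cols):
                lon = -180.0 + c * step
                # A real agent would fetch the image here and run face detection.
                yield zoom, lat, lon
        step /= 2  # finer grid for the next, closer pass


# Count how many positions each pass visits.
counts = Counter(zoom for zoom, _, _ in scan_positions())
for zoom, n in sorted(counts.items()):
    print(f"zoom {zoom}: {n} positions")
```

With these defaults the three passes visit 8, 32, and 128 positions. Halving the step quadruples the number of samples per pass, which is the exponential growth in images and travel time mentioned above.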

The face tracker provides versatile and astonishing results as it endlessly travels the world. Some of the detections are not ideal, because the human eye cannot recognize any face-like pattern in them. Other satellite figures inspire the imagination in a tremendous and often comical way. The search continues as our diligent robot perseveres in its investigation.